Abstract

Vision Language Models (VLMs) have achieved remarkable success, particularly with "think-with-image" paradigms that enhance reasoning by actively image zooming to explore visual details, moving beyond reliance on purely textual thought processes. However, this approach presents a challenge in balancing performance with efficiency, as proactive zooming incurs massive computational costs and may impair global understanding. To address this problem, we introduce adaptive chain-of-focus (Adaptive-CoF), a framework that teaches VLMs to perform visual search and zooming only when necessary, based on obtained visual cues and the given questions, achieving efficient multimodal reasoning. We enable this capability through a two-stage pipeline: (1) supervised fine-tuning on an introduced MM-Adaptive-CoF SFT dataset that is constructed by a visual search agent with multi-step reasoning trajectories under diverse resolutions and question complexities, and (2) reinforcement learning with an adaptive group-aware reward (AGAR) on MM-Adaptive-CoF RL dataset, allowing the model to master an adaptive strategy. Our experiments show Adaptive-CoF achieves superior performance with exceptional efficiency. On the V* benchmark, it reduces zoom-in operations by 75% compared to proactive models and achieves comparable even better accuracy with nearly 50% fewer tokens, establishing a new paradigm for efficient and accurate VLMs.

Method

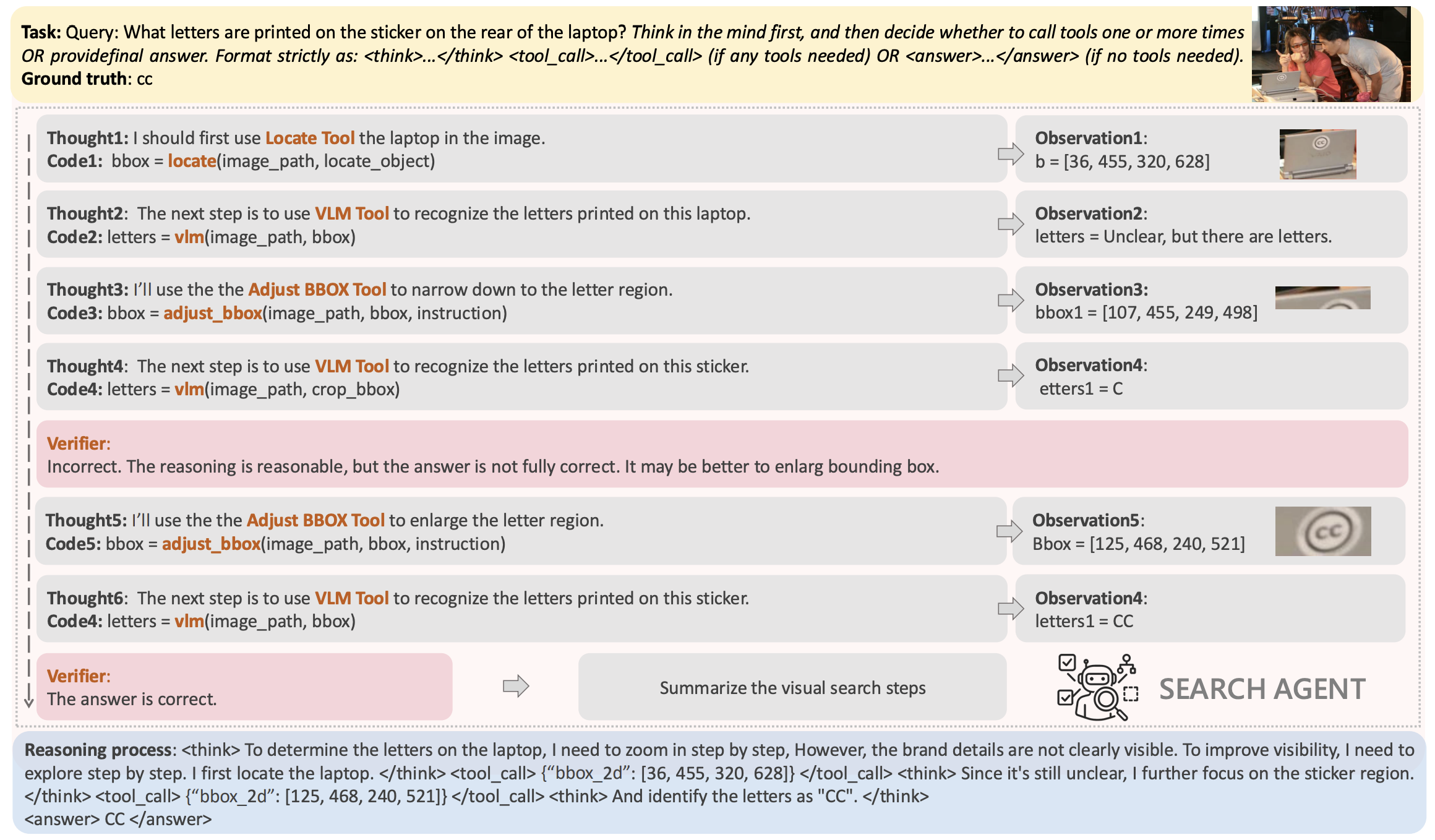

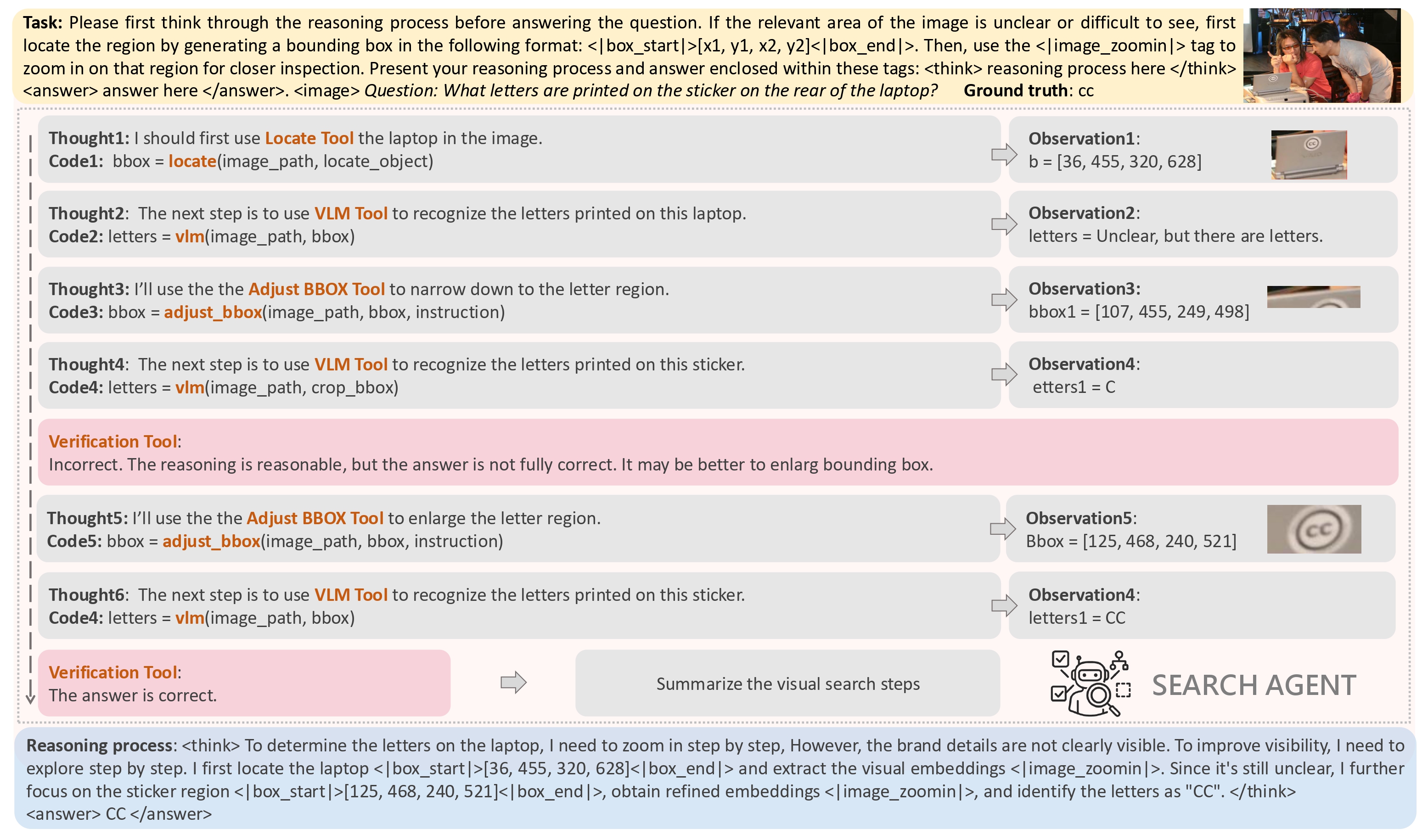

We build a visual search agent based on the ReAct framework, which solves problems iteratively through a Thought-Action loop. When executing visual search tasks, the agent employs a specific strategy of progressive $\text{zoom-in}$ or refinement via tool execution (e.g., image processing or focusing operations). This method enables the agent to acquire more precise, authentic, and valuable search data to guide subsequent reasoning and decision steps.

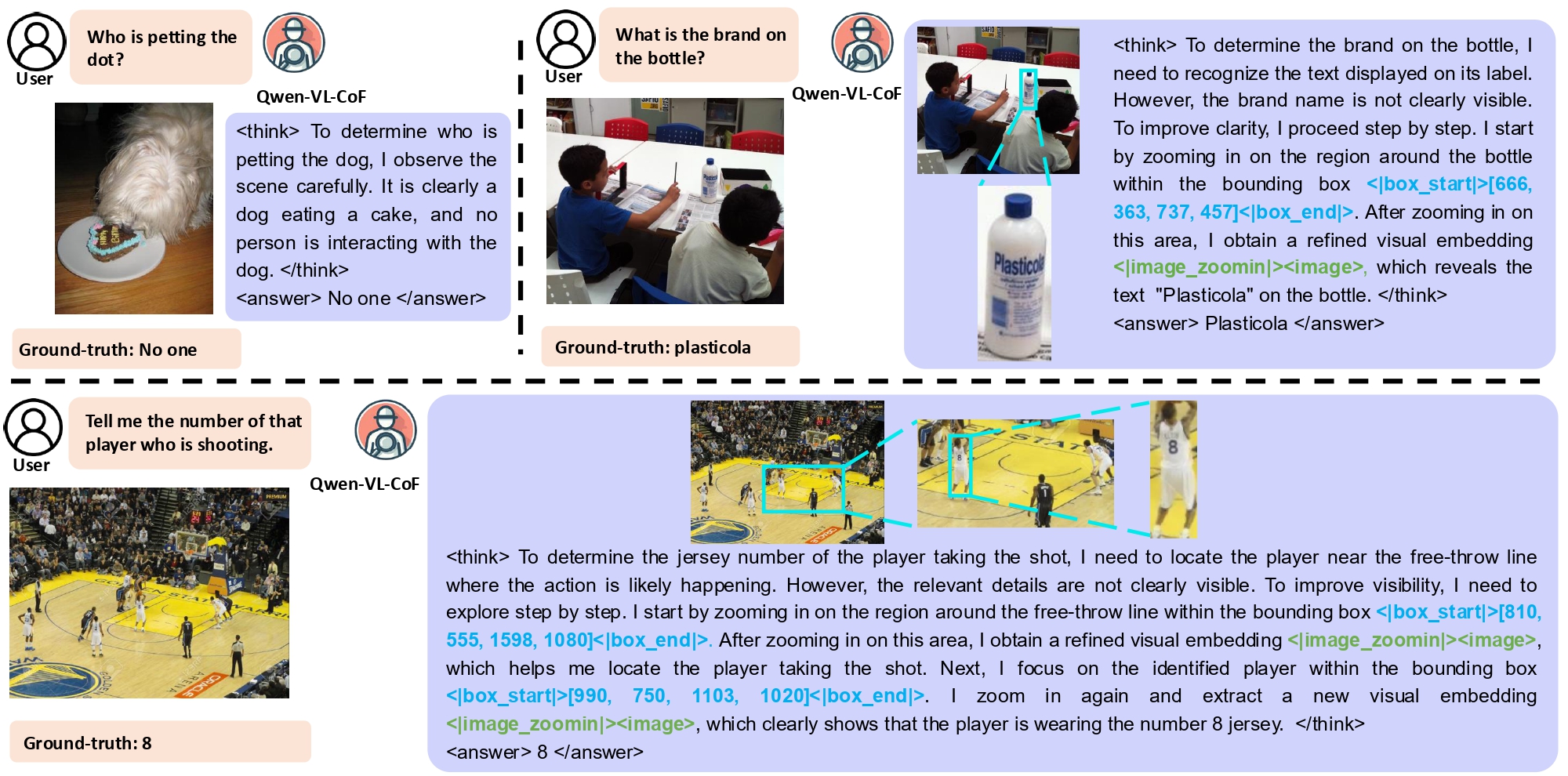

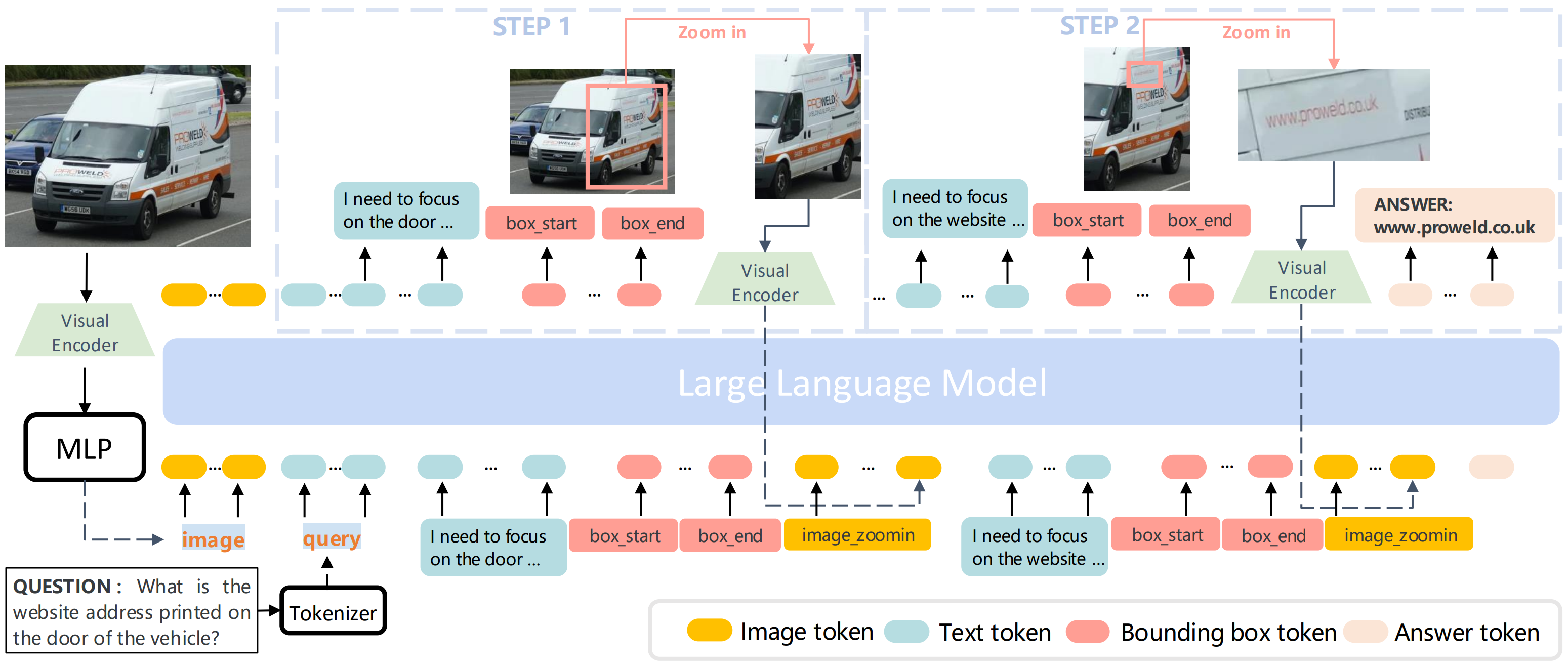

The Chain-of-Focus (CoF) method enables VLMs to perform adaptive search and zooming in on key image regions, thereby creating a chain of focus steps for multimodal reasoning with gradually obtained visual cues.

- When the given image is high-resolution and uses a large number of visual tokens, or when the question depends on a large region of the image, the extracted visual tokens are often sufficient to answer the question directly.

- When the image is low-resolution with fewer visual tokens, or when the question demands details from small regions of the image, the visual tokens may not provide enough cues. In this case, the model should search for and zoom in on key image regions to extract more visual cues.

In implementation, the visual tokens corresponding to key regions are appended to previously generated output tokens for subsequent outputs during a single generation round. This approach allows the VLMs to gather more visual cues, enabling them to analyze the image more thoroughly, accurately, and reliably than if they only relied on a static view of the image.

Note that our method does not perform visual search and zooming for every image, but performs adaptive search and zooming based on obtained visual cues, reducing the cost while keeping the performance.

CoF adopts a two-stage training pipeline.

In the SFT stage, we construct the MM-CoF dataset with 5K samples from the SAM dataset across diverse resolutions. For each image, we synthesize a task and use a visual agent with multiple tools to search and reason until task completion. The agent's reasoning steps are then summarized into a CoF process by an LLM. We fine-tune a Qwen2.5-VL-7B model on MM-CoF for cold start.

In the RL stage, we leverage the outcome accuracies and formats as rewards to update the VLMs, enabling further refining the model’s search and reasoning strategy without human priors. We denote the obtained model as Qwen2.5-VL-7B-CoF.

Performance

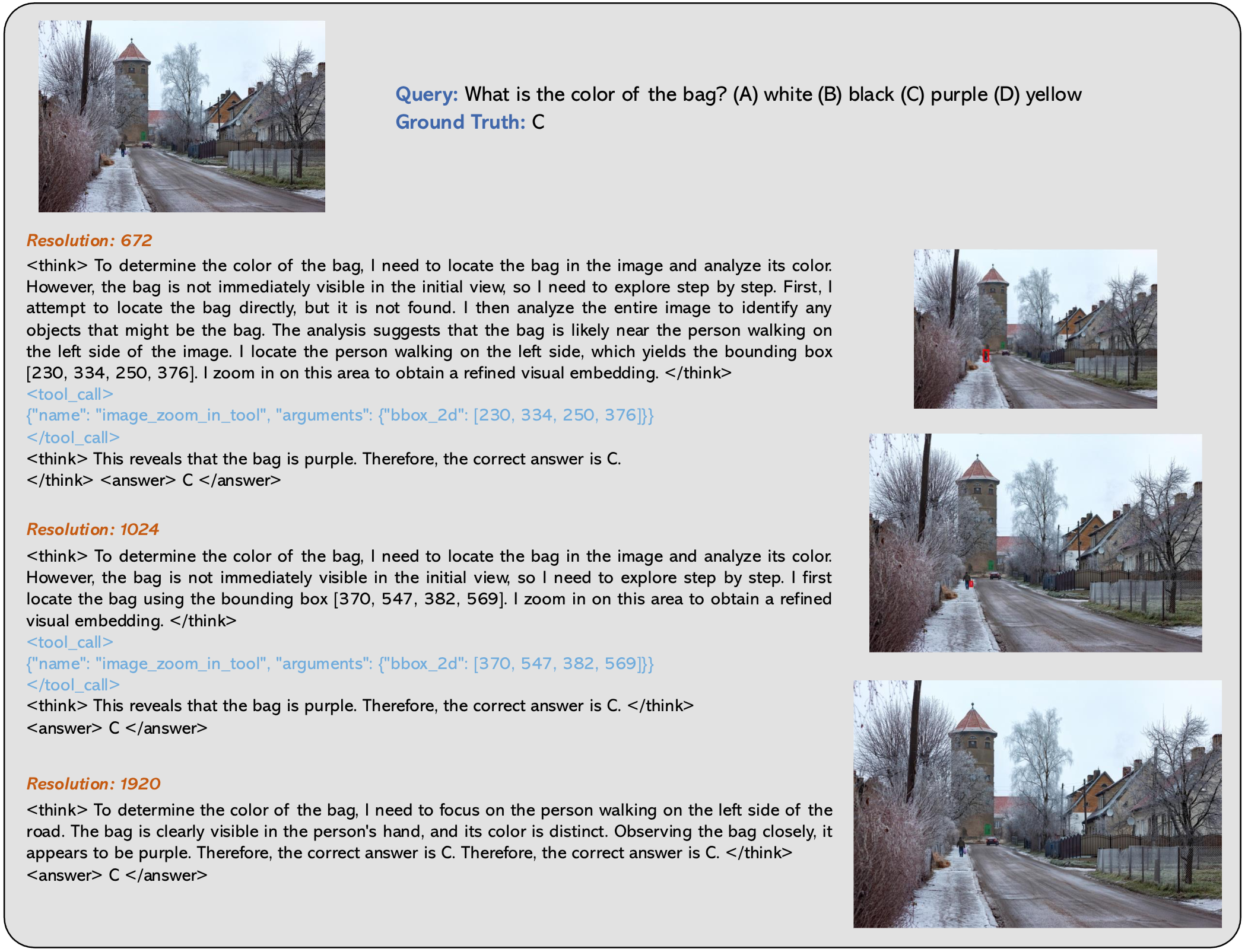

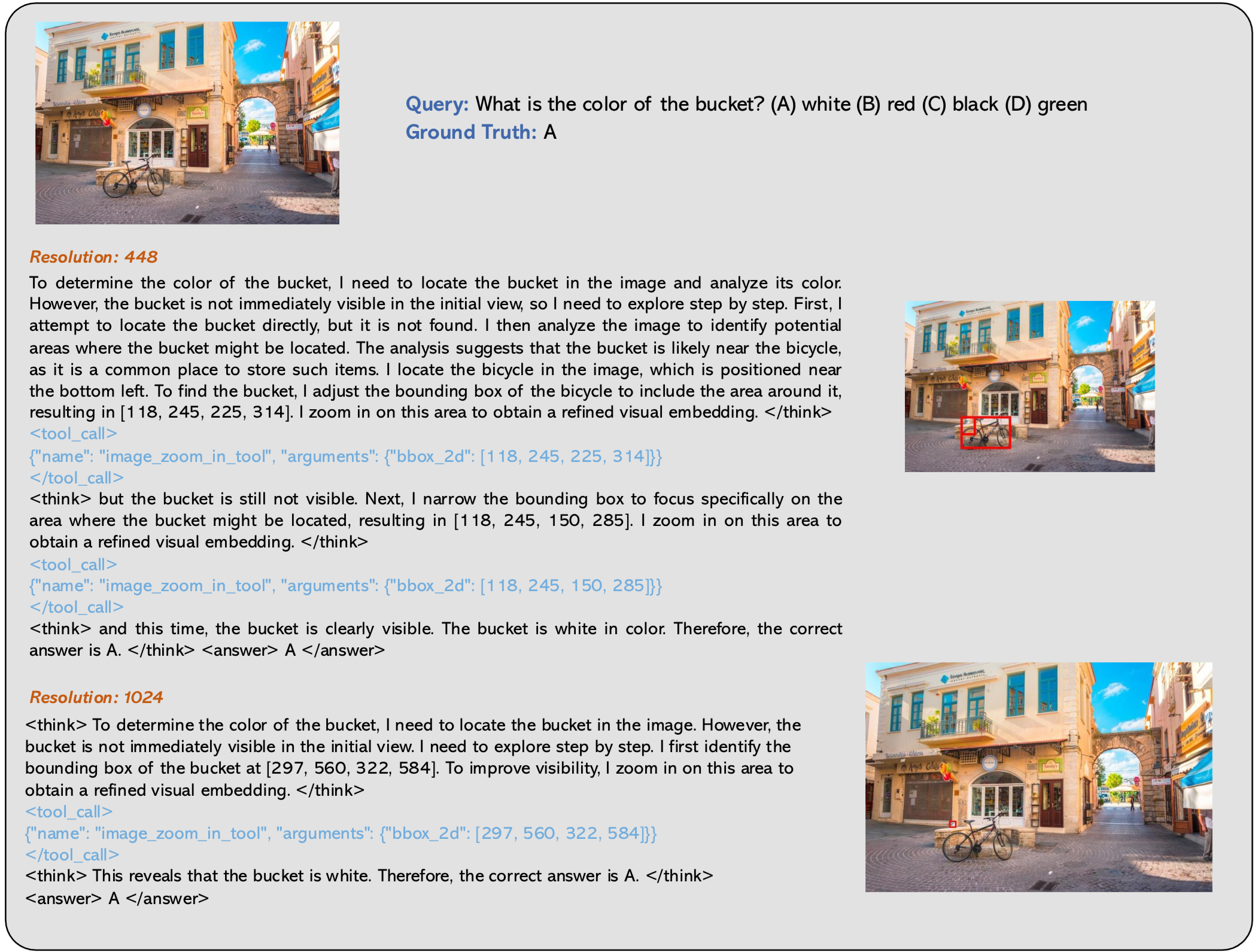

Adaptive Case Study

Visualized Reasoning Cases

Query: Is the color of the bus red or yellow?

(A) yellow

(B) red

Answer with the option's letter from the given choices directly.

Output:

<think> To determine the color of the bus, I need to closely observe the bus in the image. The bus is clearly visible, and its color can be seen distinctly as red. </think>

<answer> B </answer>

Query: What is the color of the woman's jacket?

(A) black

(B) red

(C) blue

(D) orange

Answer with the option's letter from the given choices directly.

Output:

<think> To determine the color of the woman's jacket, I need to closely examine the area where she is located. However, the color details are not immediately clear due to the distance and lighting conditions. To improve visibility, I proceed step by step. I first identify the bounding box corresponding to the woman. </think>

{

"name": "image_zoom_in_tool",

"arguments": {

"bbox_2d": [570, 1726, 615, 1848]

}

}

</tool_call>

<think> This reveals that the jacket is light blue. </think>

<answer> C </answer>

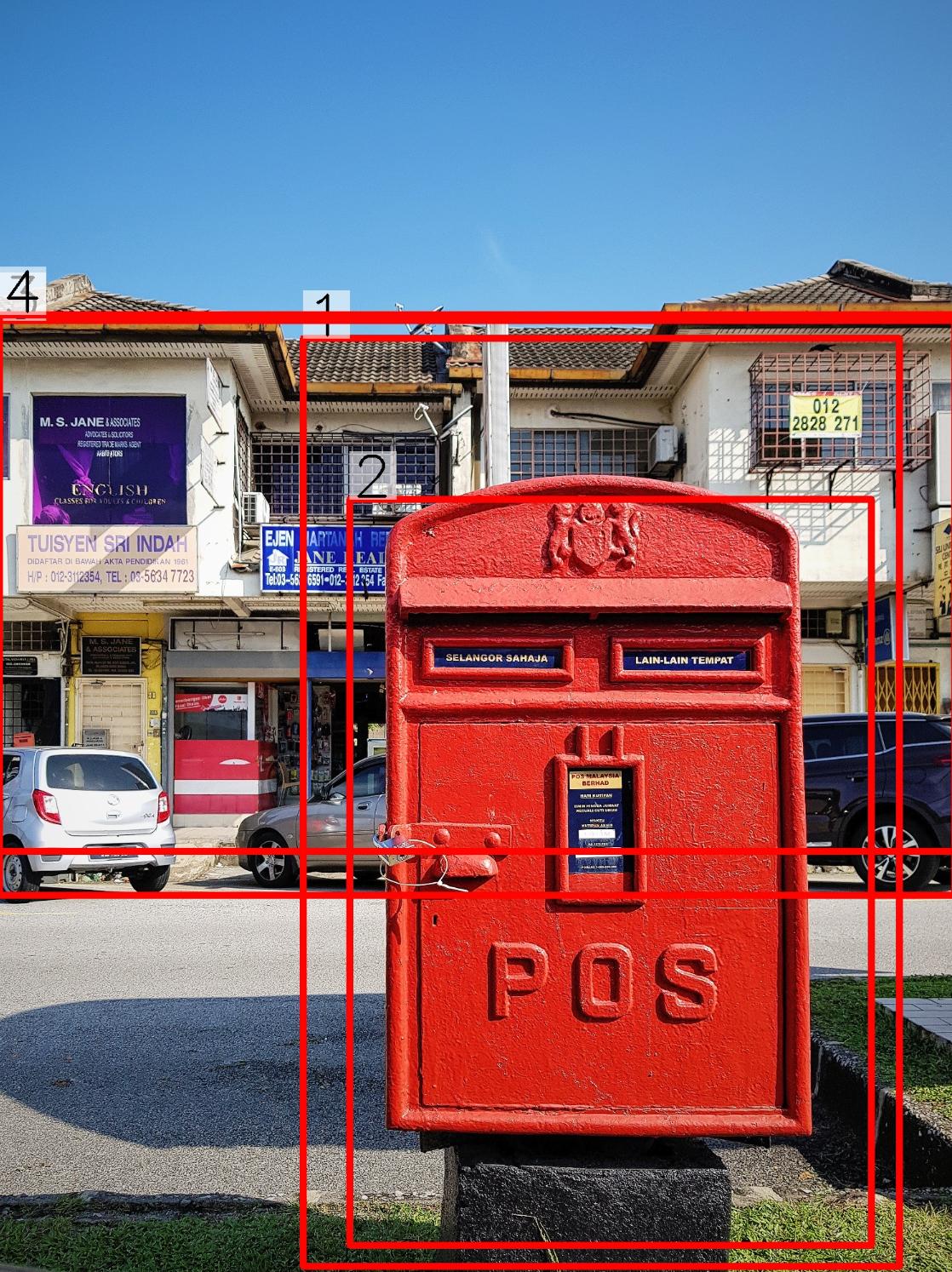

Query: What is the color of the lock?

(A) yellow

(B) silver

(C) golden

(D) red

Answer with the option's letter from the given choices directly.

Output:

<think> To determine the color of the lock, I need to closely examine the area where the lock is located. However, the lock is not immediately visible, making it difficult to identify its color. To improve visibility, I proceed step by step. I first attempt to locate the lock but find no clear indication due to its small size. I then focus on the red postbox, identifying two bounding boxes: [585, 700, 1305, 1821] and [645, 920, 1275, 1821]. I analyze these areas but still cannot locate the lock. Next, I shift my attention to the area above the postbox, locating two bounding boxes: [0, 600, 1425, 1241] and [0, 600, 1425, 1301]. I zoom in the boundingbox. </think>{

"name": "image_zoom_in_tool",

"arguments": {

"bbox_2d": [0, 600, 1425, 1241]

}

}

</tool_call>

<think> This reveals the presence of a mailbox.Upon analyzing this refined area, I confirm that the lock on the mailbox is silver. </think>

<answer> B </answer>

Conclusion

In this paper, we present adaptive chain-of-focus (Adaptive-CoF), a framework that enables VLMs to adaptively perform fine-grained visual search and zooming. Through a two-stage training pipeline combining supervised fine-tuning and reinforcement learning, Adaptive-CoF learns to balance detailed perception with computational efficiency, overcoming the trade-off between static viewing and exhaustive zooming. Experiments demonstrate state-of-the-art performance on challenging benchmarks with significantly reduced computational cost.

BibTeX